A web application without sessions is hard to imagine. People use them very liberally to maintain session data, and so do I. But what most people don’t know is that sessions can cause issues with your application’s concurrency if they are not used properly. Even though it is an obvious thing, I never knew (or thought!) about it till today.

What’s the problem?

The PHP session lock can block your application’s concurrent requests from a single client. If a client sends multiple requests concurrently and each request involves session usage, the requests are served sequentially instead of being processed concurrently.

Why does this happen?

PHP, by default, uses files to store session data. For each new session, PHP creates a file and keeps writing session data to it (hint: blocking I/O). So every time you call the `session_start()` function, it opens your session file and acquires an exclusive lock on it. If one of your scripts is taking a while to process a request and the same client sends another request that also requires the session, the second request is blocked until the first one completes. The second request also calls `session_start()`, but it has to wait because the first request already holds the exclusive lock on the session file. Once the first request is fulfilled, PHP closes the session file at the end of script execution and releases the lock. Only then does the second process get a chance to acquire the lock on the session file and proceed with its execution.
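To get a feel for what an exclusive file lock does, here is a minimal sketch using PHP's own `flock()` on a temporary file. It is only an illustration of the locking idea, not PHP's internal session code; the temporary file and the variable names are my own assumptions. `LOCK_NB` is used so the second attempt fails right away instead of waiting, which is what a second `session_start()` would effectively do.

```php
<?php
// Illustration only: a temporary file stands in for a session file.
$path = tempnam(sys_get_temp_dir(), 'sess_demo_');

// "Request 1" opens the file and takes an exclusive lock.
$first = fopen($path, 'c+');
$firstLocked = flock($first, LOCK_EX);

// "Request 2" opens the same file. LOCK_NB makes the attempt return
// immediately instead of waiting, so the conflict can be observed.
$second = fopen($path, 'c+');
$secondLockedWhileHeld = flock($second, LOCK_EX | LOCK_NB);

// Once "request 1" releases the lock, "request 2" can acquire it.
flock($first, LOCK_UN);
$secondLockedAfterRelease = flock($second, LOCK_EX | LOCK_NB);

var_dump($firstLocked, $secondLockedWhileHeld, $secondLockedAfterRelease);

// Clean up the demo file.
flock($second, LOCK_UN);
fclose($first);
fclose($second);
unlink($path);
```

On systems where the lock belongs to the file handle (Linux, as far as I know), the second attempt should fail while the first lock is held and succeed once it is released. Without `LOCK_NB`, the second `flock()` call would simply wait, which is the blocking described above.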

However, this leads to concurrency issues for the same client only. A request from one client cannot block another client’s request, because the two clients have different sessions and hence different session files.

When can it become a bottleneck?

It is hard to notice this blocking period if your scripts are short (in terms of execution time!). But if you have even slightly long-running scripts, you are in trouble. This can become a bottleneck if you are working with AJAX and fetching data through several requests on the same page, which is quite common in today’s web applications.

Consider a scenario where you fetch several pieces of data through different background AJAX requests and display them in the UI. These requests use the session. All the asynchronous requests are fired immediately and together, but the first request to reach the server gets the session lock while the others have to wait. So all these requests are processed sequentially even though they do not depend on each other. As an example, suppose 5 requests, each taking approximately 500ms to complete, are sent concurrently. Because of this blocking, they are not executed concurrently, so the last (5th) request starts executing only after about 2 seconds and completes after about 2.5 seconds, even though it required only 500ms to process. This can be a serious problem if some of the scripts require more processing or the number of requests is greater.
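The arithmetic is easy to check with a few lines of PHP. This is just a sketch under the assumption that all requests arrive at the same moment and run strictly one after another; `serializedTimeline()` is a hypothetical helper name of my own.

```php
<?php
// Hypothetical helper: when does each request start and finish if the
// session lock forces the requests to run one after another?
function serializedTimeline(int $requests, int $durationMs): array
{
    $timeline = [];
    for ($i = 0; $i < $requests; $i++) {
        $start = $i * $durationMs; // waits for all earlier requests to finish
        $timeline[] = [
            'request'  => $i + 1,
            'start_ms' => $start,
            'end_ms'   => $start + $durationMs,
        ];
    }
    return $timeline;
}

foreach (serializedTimeline(5, 500) as $row) {
    printf(
        "request %d: starts at %d ms, ends at %d ms\n",
        $row['request'],
        $row['start_ms'],
        $row['end_ms']
    );
}
```

With 5 requests of 500ms each, the last one starts at 2000 ms and ends at 2500 ms. Without the lock, all five could finish in roughly 500ms.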

I wouldn’t have noticed this if I hadn’t added `sleep(2);` to my code on my local machine to simulate real-world use over a slow connection. My page was sending 5 requests and they were being served one every 2 seconds, sequentially!

So what’s the solution?

Close the session once you are done using it!

PHP has a function for this: `session_write_close()`. Calling it ends the current session, writes the session data to the file, and releases the lock on the file. Further requests are then no longer blocked, even if the current script still has processing left to do.

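An important thing to note is that once you close the session using `session_write_close()`, you will not be able to use the session further in the current script: the values already loaded into `$_SESSION` can still be read, but any changes made after that point are not saved.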
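Here is a small sketch of that caveat. The function name `demoWriteAfterClose()` and the `counter` key are made up for illustration, and it assumes the default file-based session handler with a writable session save path.

```php
<?php
// Sketch: what happens to $_SESSION changes made after the session is closed.
function demoWriteAfterClose(): array
{
    session_start();
    $_SESSION['counter'] = 1;          // saved: the session is still open
    session_write_close();             // data written, lock released

    $readable = $_SESSION['counter'];  // the loaded value is still readable
    $_SESSION['counter'] = 99;         // only changes the in-memory array

    session_start();                   // reopen: data is loaded from storage again
    $stored = $_SESSION['counter'];    // should still be 1, not 99
    session_write_close();

    return ['readable' => $readable, 'stored' => $stored];
}
```

So the safe order is: read and write everything you need from the session first, close it, and only then start the slow part of the script.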

How do I simulate this problem?

If you want to see this problem in action, try the following code:

Blocking Example
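A minimal sketch of such a script, assuming a hypothetical file name `blocking.php`, a made-up `hits` session key, and the same 2-second `sleep()` I used on my local machine:

```php
<?php
// blocking.php (hypothetical file name)
session_start();   // acquires the exclusive lock on the session file

// Use the session so the request really depends on it.
$_SESSION['hits'] = ($_SESSION['hits'] ?? 0) + 1;

sleep(2);          // simulate slow work while the lock is still held

// The lock is released only when the script ends.
echo 'hit ' . $_SESSION['hits'] . ' done at ' . date('H:i:s');
```

Every request from the same client has to wait for the previous one to finish its `sleep(2)` before its own `session_start()` can return.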

Now send 5 AJAX requests to this file. Example code with jQuery:

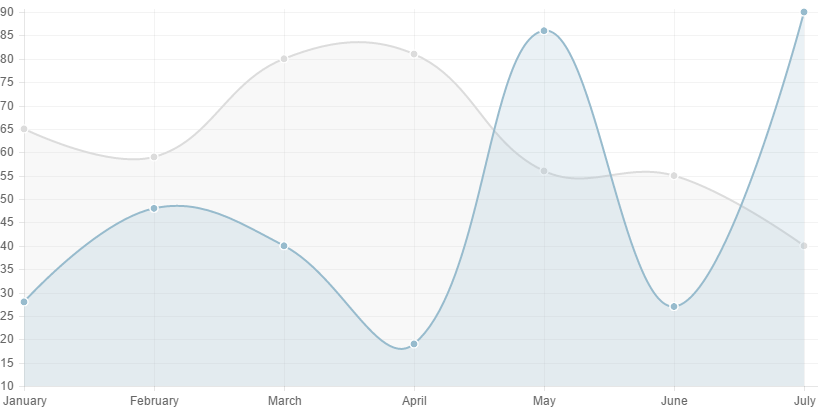

Send Log:

Complete Log:

Non-Blocking Example
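A minimal sketch of the non-blocking variant, again with a hypothetical file name (`non-blocking.php`) and a made-up `hits` session key. The only real difference is that the session is closed before the slow part starts:

```php
<?php
// non-blocking.php (hypothetical file name)
session_start();                       // acquires the lock on the session file

// Do all session reads and writes up front.
$_SESSION['hits'] = ($_SESSION['hits'] ?? 0) + 1;
$hits = $_SESSION['hits'];

session_write_close();                 // save the data and release the lock now

sleep(2);                              // slow work no longer holds the lock

echo 'hit ' . $hits . ' done at ' . date('H:i:s');
```

Each request still takes about 2 seconds, but the requests from one client can now overlap instead of queuing behind each other.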

Now send 5 AJAX requests to this file. Example code with jQuery:

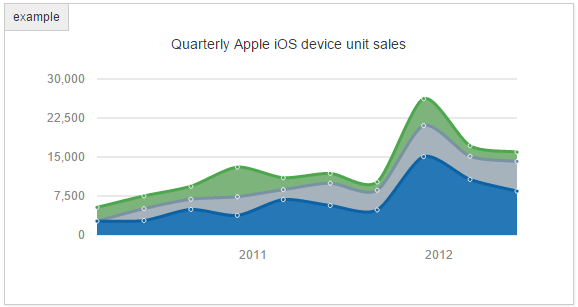

Send Log:

Complete Log:

You will be able to see how this small thing can greatly affect your application.