If your web application grows, or if it involves a lot of computation to generate dynamic content, its performance is going to suffer. Some data requires plenty of calculation but does not change on every request, and it’s wise to start caching such data or objects. Recently, in one of the applications I have been working on, there was considerable unnecessary delay because some heavy data was being recalculated every time a user sent a request. It was high time I started caching those results.

There are plenty of extensions and frameworks available for caching in PHP. But instead of spending my time looking for the best extension or framework and learning it, I decided to implement caching on my own without using anything fancy.

I am going to explain two ways of caching content:

- Server Side Caching

- Client Side Caching

Server Side Caching

In server-side caching, we cache data and/or objects on the server and reuse them the next time a user requests them, instead of recalculating them.

For caching these things, we are going to use files. I assume your calculation takes more time than reading a file; otherwise there is no point in caching. We will write each calculated piece of data or object to a file stored on the server.

It’s always a good idea to define a few constants or configuration variables for these settings. You should have at least the following parameters in your configuration; I would make a config file with these constants:

- CACHE_ENABLED: a parameter to turn caching on or off

- CACHE_PATH: the path to your cache directory. I prefer to create a `cache` directory in my application root. If you are on Linux, don’t forget to give your web server write permission on the cache folder.

- CACHE_EXPIRY: a parameter that forces the cache to be invalidated a specified number of hours after the cache was created.

```php
<?php

//! Config parameter to turn caching on/off
define("CACHE_ENABLED", true);

//! Path to store your cache files (keep the trailing slash)
define("CACHE_PATH", "/var/www/YourApp/cache/");

//! Cache expiration time in hours (the scripts below multiply it by 3600)
define("CACHE_EXPIRY", 1);

?>
```
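Because the whole scheme depends on the web server being able to write to CACHE_PATH, it can help to check this once at startup. Below is a minimal sketch. The function name `ensureCacheDir()` and the 0775 permission mode are assumptions, not part of the original setup.

```php
<?php

/**
 * Hypothetical helper: make sure the cache directory exists and is writable.
 * Returns true when caching can be used, false otherwise.
 */
function ensureCacheDir(string $path): bool
{
    // Create the directory (and any missing parents) if it does not exist yet.
    // 0775 is an assumed permission mode; adjust it to your server setup.
    // The second is_dir() check covers another request creating it concurrently.
    if (!is_dir($path) && !mkdir($path, 0775, true) && !is_dir($path)) {
        return false;
    }

    // The web server user must be able to create files here.
    return is_writable($path);
}
```

You might call `ensureCacheDir(CACHE_PATH)` from your bootstrap file and skip caching (or log an error) when it returns false.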

Now comes the real caching part. Before you start caching, there are a few choices you need to make, considering various factors.

- What data are you going to cache? Don’t cache something that takes less time to compute than to read from or write to a file. You should also not cache something that changes on every request.

- When do you need to purge the cache? Knowing when to purge your cache is just as important. Make a list of all the events or conditions that can invalidate your cache. If you do not purge the data on the appropriate events or conditions, your application may end up with inconsistent or corrupted data or state.
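For the first question, a rough measurement is more reliable than intuition. The sketch below times one run of the computation against one serialize, write, read and unserialize round trip through a temporary file. `isWorthCaching()` is a hypothetical name. A single timing is noisy, so treat the result as a hint rather than a verdict.

```php
<?php

/**
 * Hypothetical helper: compare the cost of computing a value with the cost
 * of writing it to a cache file and reading it back.
 */
function isWorthCaching(callable $compute, string $tmpDir): bool
{
    // Time the expensive computation itself.
    $start = microtime(true);
    $value = $compute();
    $computeTime = microtime(true) - $start;

    // Time one write plus one read, as the file cache would perform them.
    $file = tempnam($tmpDir, 'cachetest');
    $start = microtime(true);
    file_put_contents($file, serialize($value));
    $restored = unserialize(file_get_contents($file)); // what a cache hit would return
    $ioTime = microtime(true) - $start;
    unlink($file);

    // Caching only pays off when file I/O is cheaper than recomputing.
    return $computeTime > $ioTime;
}
```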

Once you have decided on these things, you can start caching. We will compute the object and store it in a file. It is wise to use a proper naming convention for the stored objects, because a good naming convention makes purging the cache easier.

In my application, there were a few types of objects, and each object’s value was different for each user. So I decided to use the following convention: `{OBJ_TYPE}_{OBJ_TYPE_ID}_uid_{UID}.cache`

{UID} is the ID of the user. So when the cache for a particular object becomes invalid, I can delete all files that match `{OBJ_TYPE}_{OBJ_TYPE_ID}_*.cache`.

And if all cache entries for a single user expire, I can delete all files that match `*_uid_{UID}.cache`.
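The naming convention and the two purge patterns above map directly onto PHP’s `glob()` and `unlink()`. This is a sketch under the assumption that object types and IDs contain no glob wildcard characters (`*`, `?`, `[`). The function names are hypothetical, and the cache directory is passed in explicitly so the helpers are easy to test.

```php
<?php

// Hypothetical helpers built on the naming convention
// {OBJ_TYPE}_{OBJ_TYPE_ID}_uid_{UID}.cache

function cacheFileName(string $objType, int $objTypeId, int $uid): string
{
    return "{$objType}_{$objTypeId}_uid_{$uid}.cache";
}

// Delete every file in $cacheDir matching the glob $pattern; return how many were removed.
function purgeCacheFiles(string $cacheDir, string $pattern): int
{
    $removed = 0;
    // glob() can return false on error, so fall back to an empty list
    foreach (glob(rtrim($cacheDir, '/') . '/' . $pattern) ?: [] as $file) {
        if (unlink($file)) {
            $removed++;
        }
    }
    return $removed;
}

// One object became invalid: remove it for every user.
function purgeObjectCache(string $cacheDir, string $objType, int $objTypeId): int
{
    return purgeCacheFiles($cacheDir, "{$objType}_{$objTypeId}_*.cache");
}

// Everything cached for one user became invalid.
function purgeUserCache(string $cacheDir, int $uid): int
{
    return purgeCacheFiles($cacheDir, "*_uid_{$uid}.cache");
}
```

Note that the underscore after the ID keeps `obj_5_*` from matching `obj_50_...`, and `*_uid_1.cache` does not match `_uid_17.cache`. For example, `purgeUserCache(CACHE_PATH, $iUserID)` could be called whenever an event invalidates a user’s data.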

Now let’s start caching objects. Suppose we are serving expensive data as JSON for an AJAX request. Here is a sample snippet:

```php
<?php

require_once "loader.php"; // assumed to define the CACHE_* constants, $oid and $iUserID

$aExpensiveData = null;

if (CACHE_ENABLED) {
    //! Calculate the cache path based on your naming convention
    $sCachePath = CACHE_PATH."obj_{$oid}_uid_{$iUserID}.cache";

    if (file_exists($sCachePath)) {
        //! Get the last-modified date of this very file
        $lastModified = filemtime($sCachePath);

        //! Calculate the expiry threshold (CACHE_EXPIRY is in hours)
        $iExpiry = time() - CACHE_EXPIRY*3600;

        //! Purge the cache if it is older than the forced expiry
        if ($lastModified < $iExpiry) {
            unlink($sCachePath);
        } else {
            $aExpensiveData = unserialize(file_get_contents($sCachePath));
        }
    }
}

//! On a cache miss, do the expensive calculation
if ($aExpensiveData === null) {
    $aExpensiveData = doExpensiveCalculation();

    //! Cache the result if caching is enabled ($sCachePath was set above)
    if (CACHE_ENABLED) {
        //! Don't forget to serialize the object before writing it
        file_put_contents($sCachePath, serialize($aExpensiveData));
    }
}

header('Content-Type: application/json');

echo json_encode($aExpensiveData);

?>
```

If you look at the code, it first checks whether the cache is already available. If the cache exists and is still valid, it is used instead of doing the expensive computation. Otherwise, after doing the expensive computation, we cache the result so that the next request doesn’t miss the cache.
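The check-then-compute flow described above can be wrapped in one reusable function. A minimal sketch follows. `cacheRemember()` is a hypothetical name, `$maxAge` is given in seconds, and `LOCK_EX` is used so two concurrent requests do not interleave their writes to the same file.

```php
<?php

/**
 * Hypothetical helper: return the cached value stored in $path if it is
 * younger than $maxAge seconds; otherwise compute it, cache it, and return it.
 */
function cacheRemember(string $path, int $maxAge, callable $compute)
{
    if (is_file($path) && filemtime($path) >= time() - $maxAge) {
        $raw = file_get_contents($path);
        if ($raw !== false) {
            $value = unserialize($raw);
            // unserialize() returns false on corrupt data; only serialize(false) may decode to false
            if ($value !== false || $raw === serialize(false)) {
                return $value; // cache hit
            }
        }
    }

    // Cache miss, expired entry, or unreadable file: recompute and overwrite.
    $value = $compute();
    file_put_contents($path, serialize($value), LOCK_EX);
    return $value;
}
```

Under the same assumptions, the first script’s cache logic would reduce to something like `$aExpensiveData = cacheRemember($sCachePath, CACHE_EXPIRY*3600, 'doExpensiveCalculation');`. Overwriting an expired file instead of deleting it first avoids a moment where the file is missing.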

Client Side Caching

Well, you just saved a great amount of computing power by not recalculating an expensive object; server-side caching saves computation time. What if I told you that you can also save bandwidth and data-transfer delay by using client-side caching?

We are still considering the previous example of an AJAX request that expects an expensive object in JSON format. Now suppose that it’s a big object as well as an expensive one. It takes time to transfer the object from server to client, and it also uses up your network’s bandwidth.

You can use client-side caching to save bandwidth and data-transfer delay. Transfer delay may be slowing your application’s responses for clients with slower internet connections, and they will benefit the most from this.

To cache a “page” on the client, you need to tell the client that this page is valid for a certain number of hours or days. But again, you don’t want your client to use wrong data in case some event has invalidated that data. So we will ask the client to always validate its local cache before using it. To let the client’s browser do this, it’s important to send the Last-Modified, Cache-Control, and Expires HTTP headers properly.

Let’s implement Client Side Caching in the previous example:

```php
<?php

require_once "loader.php"; // assumed to define the CACHE_* constants, $oid and $iUserID

$aExpensiveData = null;

if (CACHE_ENABLED) {
    //! Calculate the cache path based on your naming convention
    $sCachePath = CACHE_PATH."obj_{$oid}_uid_{$iUserID}.cache";

    if (file_exists($sCachePath)) {
        //! Get the last-modified date of this very file
        $lastModified = filemtime($sCachePath);

        //! Calculate the expiry threshold (CACHE_EXPIRY is in hours)
        $iExpiry = time() - CACHE_EXPIRY*3600;

        if ($lastModified < $iExpiry) {
            //! The cache is too old: delete it and recalculate below
            unlink($sCachePath);
        } else {
            //! Get the If-Modified-Since header, if the client sent one
            $ifModifiedSince = isset($_SERVER['HTTP_IF_MODIFIED_SINCE']) ? $_SERVER['HTTP_IF_MODIFIED_SINCE'] : false;

            //! Set the Last-Modified header to the creation time of the cache
            header("Last-Modified: ".gmdate("D, d M Y H:i:s", $lastModified)." GMT");

            //! no-cache: the client may keep a copy but must revalidate it before every use
            header('Cache-Control: no-cache');

            header('Expires: '.gmdate('D, d M Y H:i:s \G\M\T', $lastModified + CACHE_EXPIRY*3600));

            //! If the client's copy is up to date, send 304 and exit; it will use its own cache
            if ($ifModifiedSince !== false && strtotime($ifModifiedSince) >= $lastModified) {
                header("HTTP/1.1 304 Not Modified");
                exit;
            }

            //! We didn't exit, so the client doesn't have the latest copy of the data
            $aExpensiveData = unserialize(file_get_contents($sCachePath));
        }
    }
}

//! On a cache miss, do the expensive calculation
if ($aExpensiveData === null) {
    $aExpensiveData = doExpensiveCalculation();

    //! Cache the result if caching is enabled
    if (CACHE_ENABLED) {
        //! Don't forget to serialize the object before writing it
        $lastModified = time();
        file_put_contents($sCachePath, serialize($aExpensiveData));

        //! Make the file's mtime match the Last-Modified value we send
        touch($sCachePath, $lastModified);

        header("Last-Modified: ".gmdate("D, d M Y H:i:s", $lastModified)." GMT");
        header('Cache-Control: no-cache');
        header('Expires: '.gmdate('D, d M Y H:i:s \G\M\T', $lastModified + CACHE_EXPIRY*3600));
    }
}

header('Content-Type: application/json');

echo json_encode($aExpensiveData);

?>
```

This time, we set the Last-Modified header to the time the cache was created, and we set Expires based on CACHE_EXPIRY.

If the client has a local copy, its browser sends that copy’s Last-Modified date back in the If-Modified-Since request header, which PHP exposes as `$_SERVER['HTTP_IF_MODIFIED_SINCE']`.

If that date shows the client’s copy is still current, you send only HTTP status 304, saying “Content isn’t modified, use your local cache.”
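The 304 decision itself is a small, pure comparison, which makes it easy to test separately from the `header()` calls. The sketch below is one way to factor it out. `httpDate()` and `clientCopyIsFresh()` are hypothetical names, and the sketch assumes the client’s copy is fresh when its If-Modified-Since date is not older than the cache file’s modification time.

```php
<?php

// Format a Unix timestamp as an HTTP date, e.g. "Sun, 06 Nov 1994 08:49:37 GMT".
function httpDate(int $timestamp): string
{
    return gmdate('D, d M Y H:i:s', $timestamp) . ' GMT';
}

/**
 * Hypothetical helper: decide whether a 304 Not Modified can be sent.
 * $ifModifiedSince is the raw header value, or null if the client sent none.
 */
function clientCopyIsFresh(?string $ifModifiedSince, int $lastModified): bool
{
    if ($ifModifiedSince === null) {
        return false; // no local copy to validate
    }
    $clientTime = strtotime($ifModifiedSince);
    // strtotime() returns false for dates it cannot parse; treat those as stale.
    return $clientTime !== false && $clientTime >= $lastModified;
}
```

In the second script, the check would then read `if (clientCopyIsFresh($_SERVER['HTTP_IF_MODIFIED_SINCE'] ?? null, $lastModified)) { ... }`.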

These examples target a single scenario, but it’s easy to apply the same concept to other scenarios with small modifications, because the techniques remain the same.

The extent of the benefit of caching depends greatly on what you cache, how expensive your calculation is, and how often your cache becomes invalid. I got a several-fold performance improvement in my application after implementing these caching techniques. Feel free to drop your questions and feedback in the comments.

PS: These are very quick-and-dirty examples of these techniques. The main goal of this blog post and its examples is to make developers familiar with caching techniques. If your application is going to rely on caching seriously, I suggest investing some time in learning popular extensions or frameworks. And if you plan to implement your own caching, it’s better to define proper classes and methods to make your code more structured and easier to maintain.

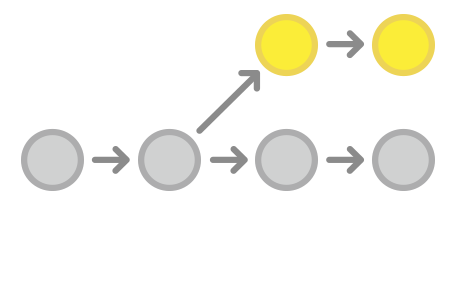

You can’t really imagine working on a project without using a version control system. Git is the best bet for me. This is an interesting story of how a wrong merge and then revert messed our codebase.
